The news: Ahead of WWDC in just a few weeks, Apple previewed new accessibility features that will arrive later this year with iOS 16 and other software updates.

Here's the rundown of the new accessibility features:

- Door Detection: helps users locate a door, tells them how far away it is, and describes its attributes, including whether it's open or closed and any signs around it

- Apple Watch Mirroring: mirror your Apple Watch display on your paired iPhone (building on AirPlay) so you can control the watch with iPhone accessibility features like Voice Control or Switch Control, including external Made for iPhone switches

- Quick Actions on Apple Watch: “a double-pinch gesture can answer or end a phone call, dismiss a notification, take a photo, play or pause media in the Now Playing app, and start, pause, or resume a workout”

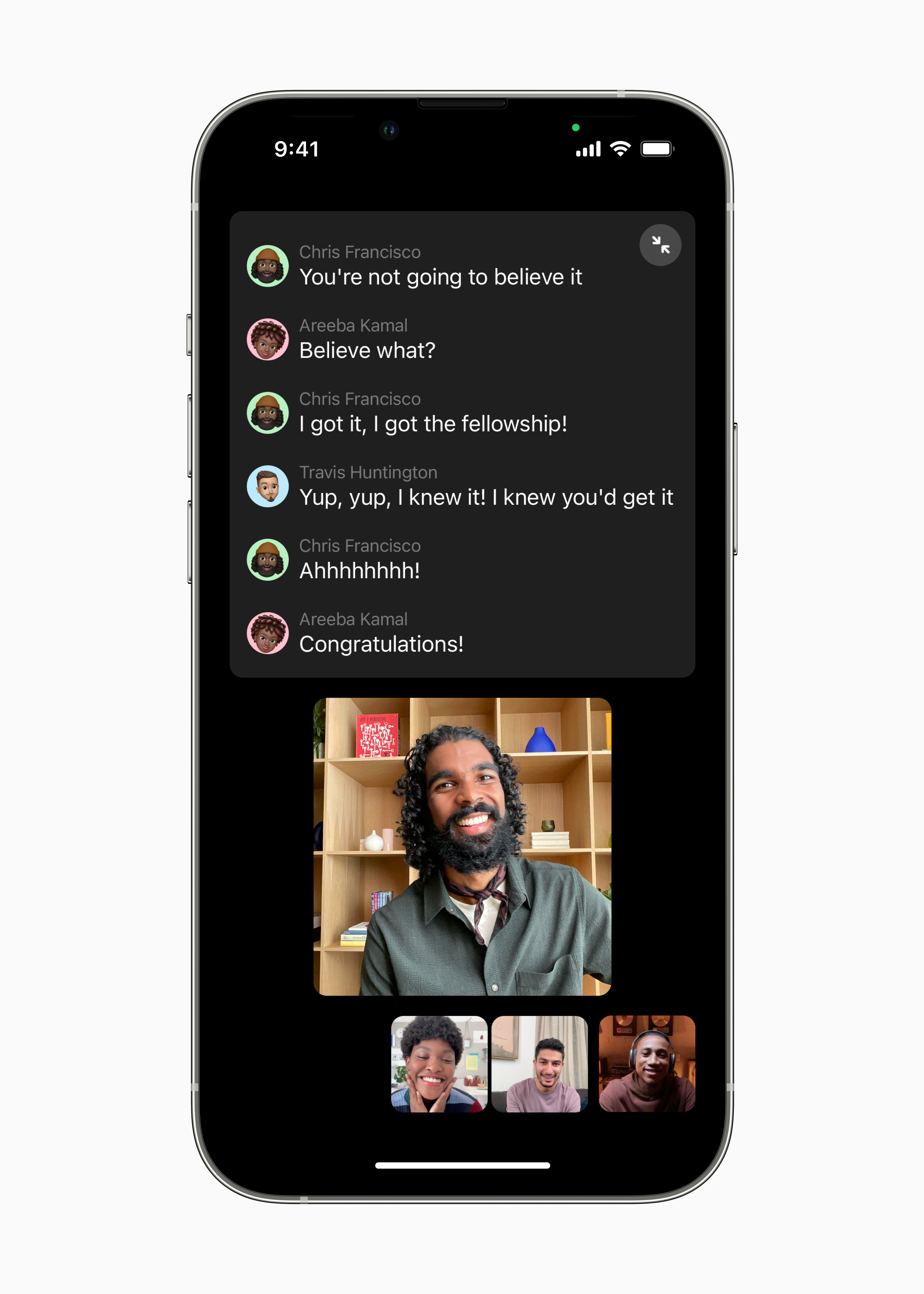

- Live Captions on iPhone, iPad, and Mac: real-time transcription of spoken audio comes to media apps, FaceTime calls, and more

- VoiceOver gains support for over 20 new locales and languages, like Bengali, Bulgarian, Catalan, Ukrainian, and Vietnamese

- Buddy Controller: a friend can help you play a video game by combining two controllers into a single input (this is super cool)

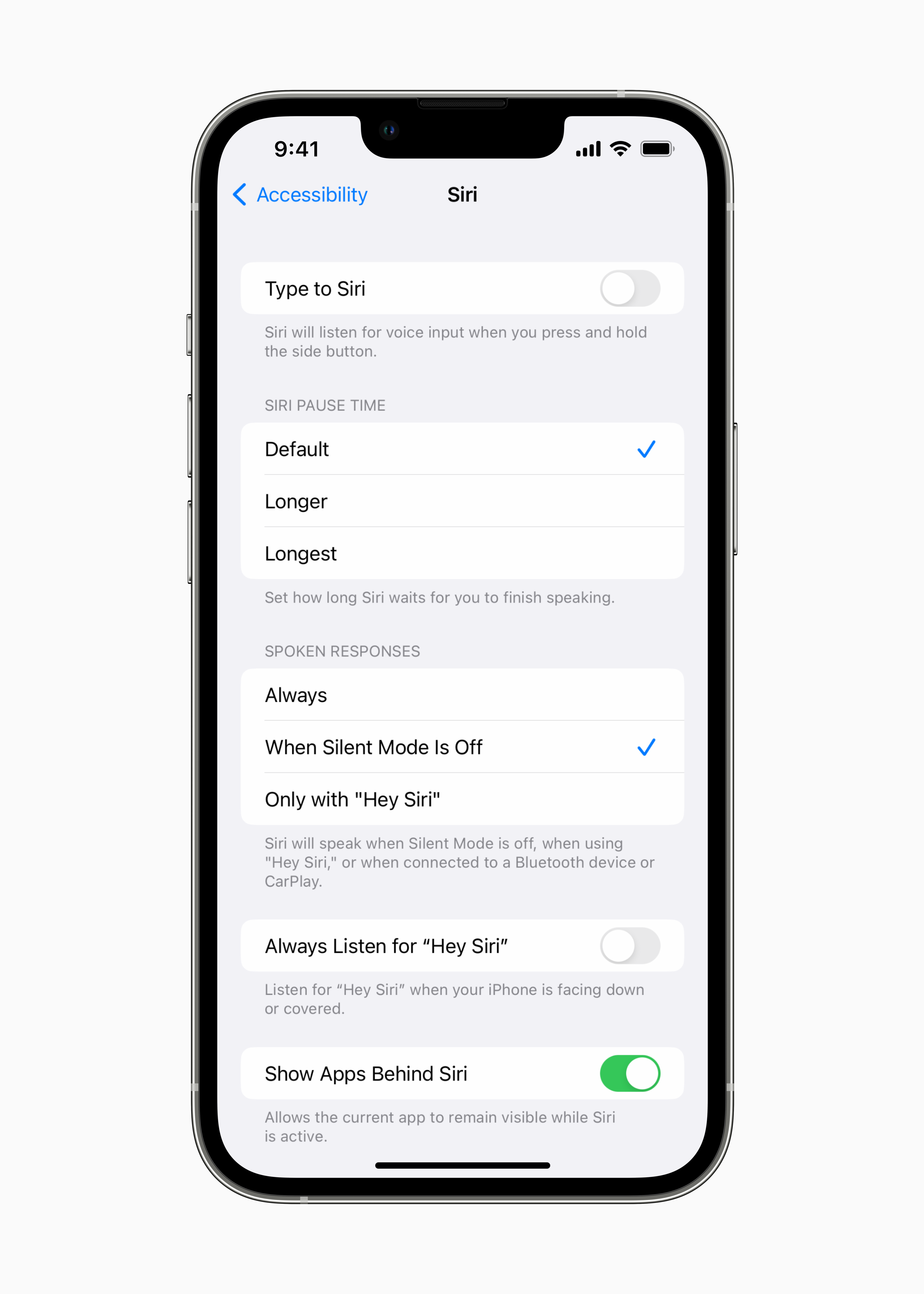

- Siri Pause Time: “users with speech disabilities can adjust how long Siri waits before responding to a request”

- Voice Control Spelling Mode: dictate custom spellings using letter-by-letter input

- Sound Recognition can be trained to recognize sounds specific to your environment, such as your home's “unique alarm, doorbell, or appliances”

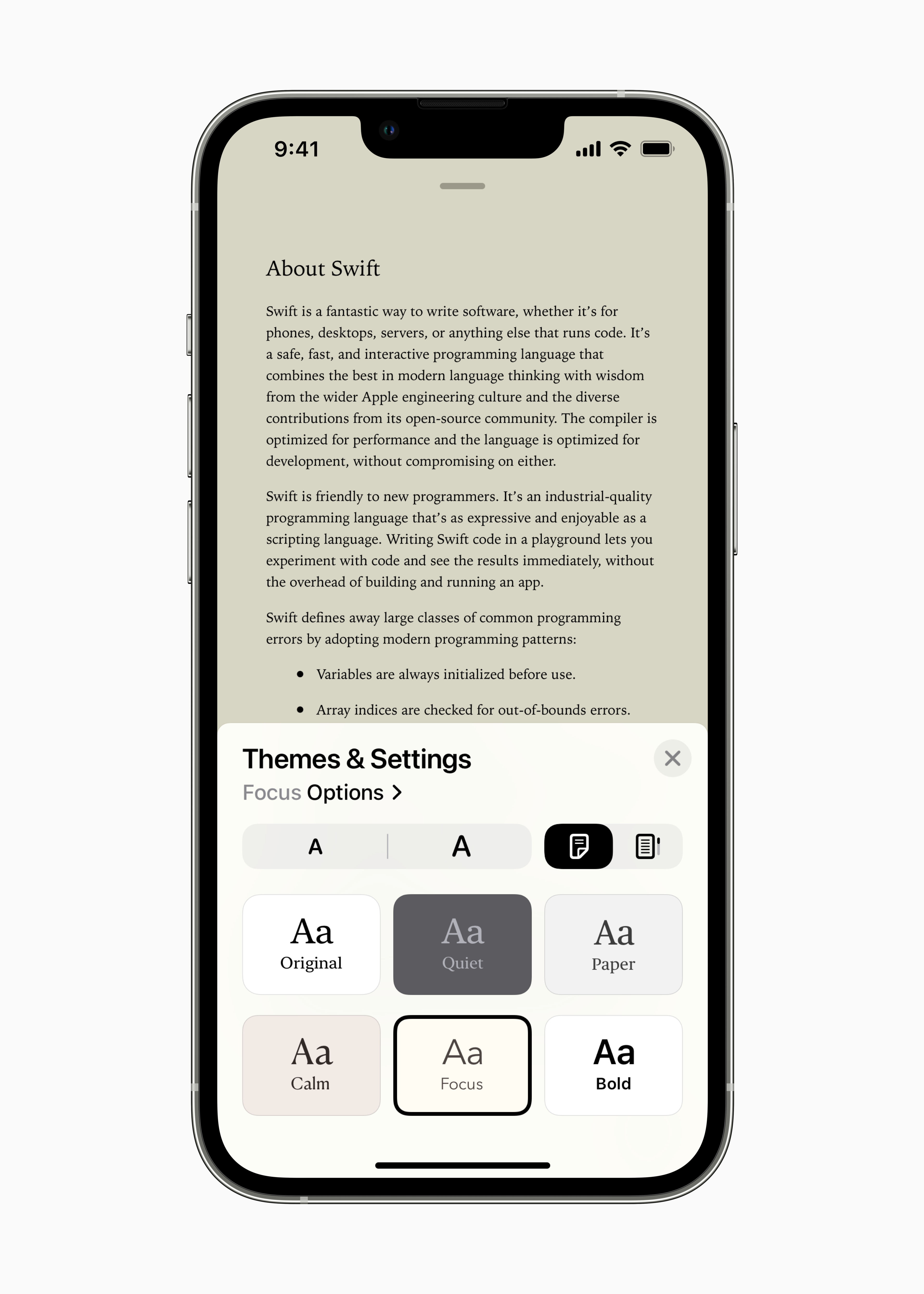

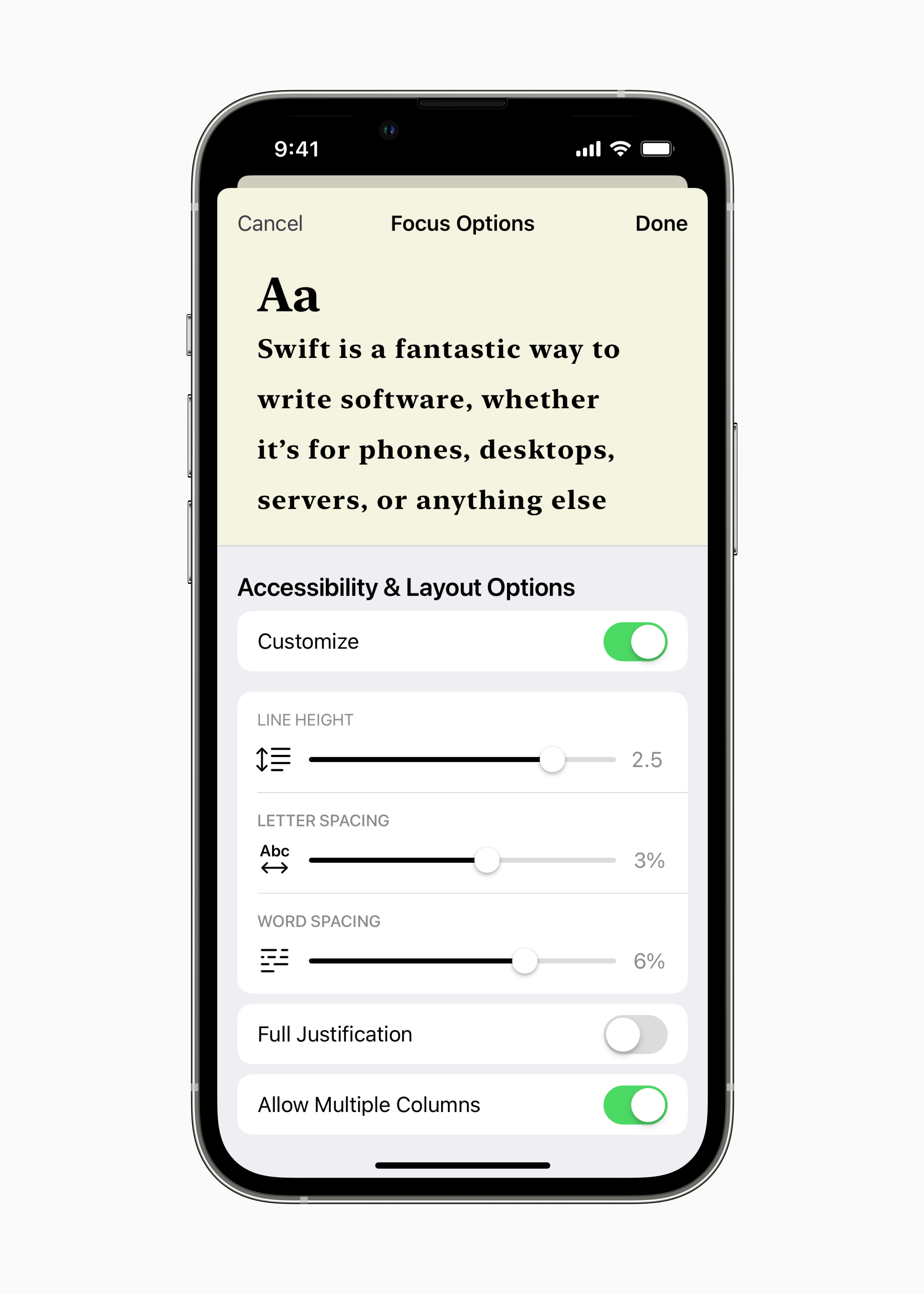

- Apple Books adds new themes and customization options, such as word spacing, to make reading more accessible

Sam’s take: I don't see any other tech company in the world taking accessibility as seriously as Apple. These features look like incredible leaps forward for users with accessibility needs, and they also amount to our first look at iOS 16. Why am I so certain of this? Apple did the same thing last year, previewing new accessibility features ahead of iOS 15.